- May 29, 2020

- Posted by: admin

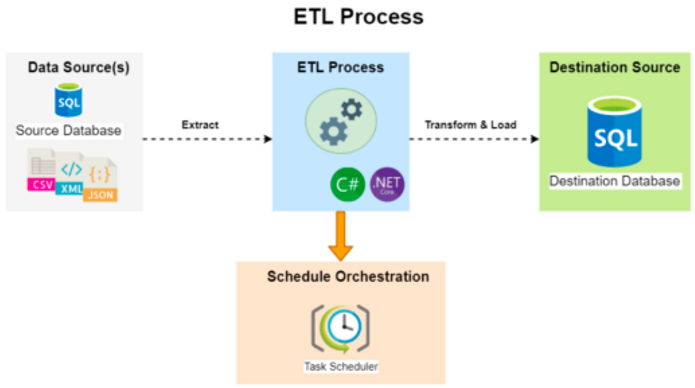

ETL (extract, transform, load) is the process of copying data from one or more sources into a destination system that represents the data differently from the source(s), or in a different context than the source(s). At its most basic, the ETL process encompasses data extraction, transformation, and loading.

Data extraction pulls data from homogeneous or heterogeneous sources. Data transformation cleans the data and converts it into a storage format and structure suitable for querying and analysis. Finally, data loading inserts the data into the final target database, such as an operational data store, a data mart, a data lake, or a data warehouse.
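The three phases can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration: the inline CSV source, the `sales` table, and the function names `extract`, `transform`, and `load` are hypothetical, and an in-memory SQLite database stands in for the real target.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real job would read a file, an API, or a database.
SOURCE_CSV = """id,name,amount
1, Alice ,10.50
2,Bob,not-a-number
3, Carol ,7.25
"""

def extract(text):
    """Extract: read raw rows from the CSV source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: clean values and convert them to the target types.

    Rows that cannot be cleaned are returned separately instead of being
    silently dropped, so they can still be audited.
    """
    cleaned, rejected = [], []
    for row in rows:
        try:
            cleaned.append((int(row["id"]), row["name"].strip(), float(row["amount"])))
        except ValueError:
            rejected.append(row)
    return cleaned, rejected

def load(rows, conn):
    """Load: insert the cleaned rows into the (hypothetical) target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for the real target database
cleaned, rejected = transform(extract(SOURCE_CSV))
load(cleaned, conn)
result = conn.execute("SELECT id, name, amount FROM sales ORDER BY id").fetchall()
```

Here `result` holds the two rows that passed cleaning, while `rejected` keeps the row whose amount could not be parsed.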

Because data extraction takes time, it is common to run the three phases as a pipeline. While data is still being extracted, a transformation process works on the data already received and prepares it for loading, and loading begins without waiting for the earlier phases to finish.
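One possible way to overlap the phases is to run each in its own thread and connect them with bounded queues. The sketch below is only an illustration under assumptions: the integer records, the multiply-by-ten transformation, and the list used as a target are placeholders for real sources, rules, and databases.

```python
import queue
import threading

SENTINEL = object()  # marks the end of the stream

def extract(out_q):
    """Produce records one at a time (stand-in for a slow source read)."""
    for record in range(5):
        out_q.put(record)
    out_q.put(SENTINEL)

def transform(in_q, out_q):
    """Transform each record as soon as it arrives."""
    while True:
        item = in_q.get()
        if item is SENTINEL:
            out_q.put(SENTINEL)  # propagate end-of-stream downstream
            break
        out_q.put(item * 10)  # placeholder transformation

def load(in_q, target):
    """Load each transformed record without waiting for extraction to finish."""
    while True:
        item = in_q.get()
        if item is SENTINEL:
            break
        target.append(item)

# Bounded queues keep a fast phase from running far ahead of a slow one.
raw_q = queue.Queue(maxsize=2)
clean_q = queue.Queue(maxsize=2)
loaded = []

threads = [
    threading.Thread(target=extract, args=(raw_q,)),
    threading.Thread(target=transform, args=(raw_q, clean_q)),
    threading.Thread(target=load, args=(clean_q, loaded)),
]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

Because each queue has a single producer and a single consumer, records reach `loaded` in the order they were extracted.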

As previously stated, data extraction takes time, so ETL processes typically run as scheduled tasks in time frames outside of working hours, when the systems involved are otherwise idle.
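A scheduled job can guard itself with a simple check that the current time falls inside the idle window. The sketch below assumes a hypothetical window of 22:00 to 06:00; the function name `in_idle_window` and the default boundaries are illustrative, not prescribed values.

```python
from datetime import time

def in_idle_window(now, start=time(22, 0), end=time(6, 0)):
    """Return True if `now` falls inside the idle window [start, end).

    Handles windows that wrap past midnight (for example 22:00 to 06:00).
    """
    if start <= end:
        return start <= now < end
    return now >= start or now < end
```

A scheduler entry point could call `in_idle_window(datetime.now().time())` and skip or defer the run when it returns `False`.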

ETL Process – Critical ETL components

- Auditing and logging: Detailed logging within the ETL pipeline ensures that data can be audited after it is loaded and that errors can be debugged.

- Fault tolerance: In any system, problems inevitably occur. ETL systems need to be able to recover gracefully, making sure that data can make it from one end of the pipeline to the other even when the first run encounters problems.

- Notification support: Build in notification systems that raise alerts when problems are encountered anywhere in the ETL pipeline.

- Scalability: As your company grows, so will your data volume. All components of an ETL process should scale to support arbitrarily large throughput.

- Accuracy: Data cannot be dropped or changed in a way that corrupts its meaning. Every data point should be auditable at every stage in your process.
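Several of the components above (logging, fault tolerance, and notification) can be combined in a small wrapper around each pipeline step. This is a minimal sketch under assumptions: `run_with_retry`, the `notify` callback, and the `flaky_load` step are hypothetical names, and a real system would send alerts by email or chat rather than appending to a list.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("etl")

def run_with_retry(step, notify, attempts=3, delay=0.0):
    """Run one pipeline step, logging each attempt and retrying on failure.

    `notify` is called only if every attempt fails; the last error is re-raised.
    """
    for attempt in range(1, attempts + 1):
        try:
            result = step()
            log.info("step %s succeeded on attempt %d", step.__name__, attempt)
            return result
        except Exception as exc:
            log.warning("step %s failed on attempt %d: %s", step.__name__, attempt, exc)
            if attempt == attempts:
                notify(f"ETL step {step.__name__} failed after {attempts} attempts: {exc}")
                raise
            time.sleep(delay)  # back off before the next attempt

# Usage: a hypothetical load step that fails twice before succeeding.
calls = {"n": 0}

def flaky_load():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("target unavailable")
    return "loaded"

alerts = []  # stand-in for a real notification channel
outcome = run_with_retry(flaky_load, alerts.append)
```

In this run the step recovers on the third attempt, so no alert is sent; had all three attempts failed, `alerts` would contain one message and the exception would propagate to the caller.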

The following high-level diagram depicts the ETL process pipeline: